Switched models again, so I'm jotting down the log. This model has two modes, deconv and interpolate_conv. The code currently uses deconv, which carries a risk of producing staircase artifacts.
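One commonly cited way a transposed convolution (deconv) can leave periodic artifacts is uneven kernel overlap: when the kernel size is not divisible by the stride, neighbouring output positions receive contributions from different numbers of input positions. This may be related to the staircase risk noted above. The pure-Python sketch below counts that overlap in 1D. The function name and the kernel/stride values are illustrative assumptions, not this model's actual settings.

```python
def deconv_overlap_counts(n_in, kernel, stride):
    """Count how many input positions contribute to each output position
    of a 1D transposed convolution with no padding."""
    n_out = (n_in - 1) * stride + kernel
    counts = [0] * n_out
    for i in range(n_in):
        # Input position i spreads its kernel over outputs i*stride .. i*stride+kernel-1.
        for k in range(kernel):
            counts[i * stride + k] += 1
    return counts

# Hypothetical settings, not taken from the model in this log.
uneven = deconv_overlap_counts(n_in=4, kernel=3, stride=2)
even = deconv_overlap_counts(n_in=4, kernel=4, stride=2)
print(uneven)  # [1, 1, 2, 1, 2, 1, 2, 1, 1]
print(even)    # [1, 1, 2, 2, 2, 2, 2, 2, 1, 1]
```

With kernel 3 and stride 2 the interior alternates between 2 and 1 contributions, while kernel 4 and stride 2 is uniform away from the borders. An interpolate-then-convolve path resizes first and then applies an ordinary stride-1 convolution, so interior output positions all see the same number of taps.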

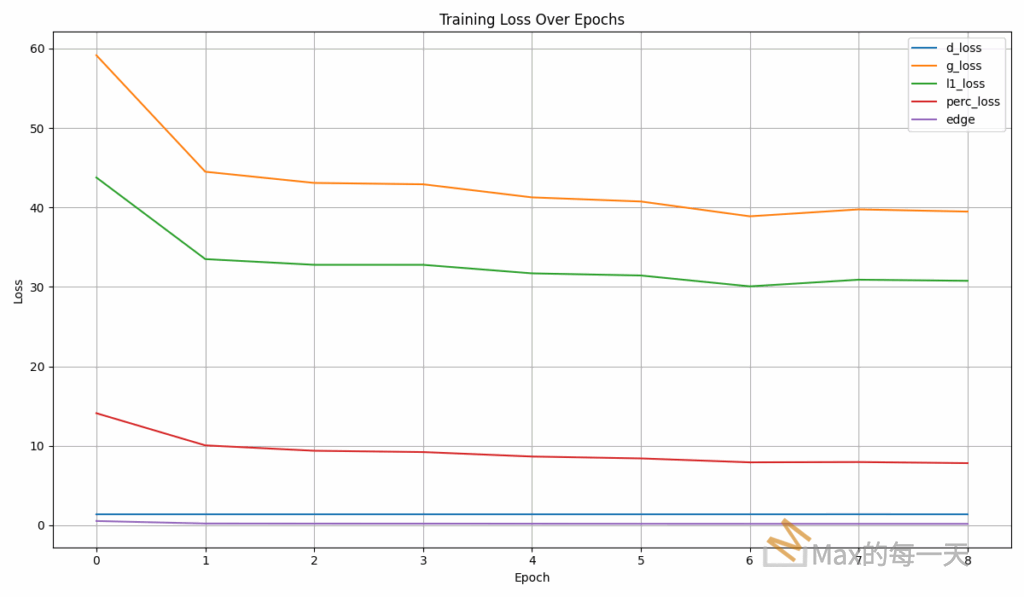

First-round figure:

First-round log:

Epoch: [ 0], Batch: [ 0/ 425] | Total Time: 4s

d_loss: 1.3887, g_loss: 107.2229, const_loss: 0.0033, l1_loss: 79.2702, fm_loss: 0.1006, perc_loss: 25.3502, edge: 1.8048

Checkpoint step 100 reached, but saving starts after step 200.

Epoch: [ 0], Batch: [ 100/ 425] | Total Time: 3m 54s

d_loss: 1.3871, g_loss: 48.5036, const_loss: 0.0020, l1_loss: 35.4839, fm_loss: 0.0295, perc_loss: 12.0673, edge: 0.2280

Epoch: [ 0], Batch: [ 200/ 425] | Total Time: 7m 51s

d_loss: 1.3868, g_loss: 48.2698, const_loss: 0.0023, l1_loss: 35.7141, fm_loss: 0.0273, perc_loss: 11.6003, edge: 0.2330

Epoch: [ 0], Batch: [ 300/ 425] | Total Time: 11m 46s

d_loss: 1.3872, g_loss: 47.6524, const_loss: 0.0020, l1_loss: 35.2970, fm_loss: 0.0260, perc_loss: 11.4042, edge: 0.2303

Epoch: [ 0], Batch: [ 400/ 425] | Total Time: 15m 44s

d_loss: 1.3869, g_loss: 44.1047, const_loss: 0.0027, l1_loss: 33.0810, fm_loss: 0.0198, perc_loss: 10.0972, edge: 0.2112

--- End of Epoch 0 --- Time: 1000.8s ---

LR Scheduler stepped. Current LR G: 0.000250, LR D: 0.000250

Epoch: [ 1], Batch: [ 0/ 425] | Total Time: 16m 43s

d_loss: 1.3867, g_loss: 46.1493, const_loss: 0.0028, l1_loss: 34.5571, fm_loss: 0.0200, perc_loss: 10.6508, edge: 0.2258

Epoch: [ 1], Batch: [ 100/ 425] | Total Time: 20m 38s

d_loss: 1.3868, g_loss: 44.0673, const_loss: 0.0020, l1_loss: 33.1534, fm_loss: 0.0204, perc_loss: 9.9712, edge: 0.2274

Epoch: [ 1], Batch: [ 200/ 425] | Total Time: 24m 38s

d_loss: 1.3873, g_loss: 43.9227, const_loss: 0.0021, l1_loss: 32.9749, fm_loss: 0.0208, perc_loss: 10.0186, edge: 0.2134

Epoch: [ 1], Batch: [ 300/ 425] | Total Time: 28m 33s

d_loss: 1.3868, g_loss: 42.5464, const_loss: 0.0020, l1_loss: 32.2327, fm_loss: 0.0195, perc_loss: 9.3895, edge: 0.2098

Epoch: [ 1], Batch: [ 400/ 425] | Total Time: 32m 34s

d_loss: 1.3869, g_loss: 45.7910, const_loss: 0.0022, l1_loss: 34.5848, fm_loss: 0.0191, perc_loss: 10.2631, edge: 0.2289

--- End of Epoch 1 --- Time: 1009.3s ---

LR Scheduler stepped. Current LR G: 0.000248, LR D: 0.000248

Epoch: [ 2], Batch: [ 0/ 425] | Total Time: 33m 32s

d_loss: 1.3867, g_loss: 42.1055, const_loss: 0.0022, l1_loss: 32.0300, fm_loss: 0.0187, perc_loss: 9.1529, edge: 0.2089

Epoch: [ 2], Batch: [ 100/ 425] | Total Time: 37m 27s

d_loss: 1.3867, g_loss: 42.8466, const_loss: 0.0021, l1_loss: 32.5180, fm_loss: 0.0182, perc_loss: 9.4029, edge: 0.2126

Epoch: [ 2], Batch: [ 200/ 425] | Total Time: 41m 23s

d_loss: 1.3869, g_loss: 41.5604, const_loss: 0.0027, l1_loss: 31.5670, fm_loss: 0.0162, perc_loss: 9.0829, edge: 0.1988

Epoch: [ 2], Batch: [ 300/ 425] | Total Time: 45m 21s

d_loss: 1.3873, g_loss: 45.1314, const_loss: 0.0021, l1_loss: 34.3541, fm_loss: 0.0171, perc_loss: 9.8493, edge: 0.2161

Epoch: [ 2], Batch: [ 400/ 425] | Total Time: 49m 16s

d_loss: 1.3907, g_loss: 43.7910, const_loss: 0.0022, l1_loss: 33.4412, fm_loss: 0.0174, perc_loss: 9.4180, edge: 0.2193

--- End of Epoch 2 --- Time: 1001.9s ---

LR Scheduler stepped. Current LR G: 0.000247, LR D: 0.000247

Epoch: [ 3], Batch: [ 0/ 425] | Total Time: 50m 14s

d_loss: 1.3878, g_loss: 42.1817, const_loss: 0.0022, l1_loss: 32.6144, fm_loss: 0.0170, perc_loss: 8.6532, edge: 0.2020

Epoch: [ 3], Batch: [ 100/ 425] | Total Time: 54m 15s

d_loss: 1.3872, g_loss: 44.4804, const_loss: 0.0024, l1_loss: 33.7744, fm_loss: 0.0178, perc_loss: 9.7727, edge: 0.2202

Epoch: [ 3], Batch: [ 200/ 425] | Total Time: 58m 10s

d_loss: 1.3867, g_loss: 42.0870, const_loss: 0.0020, l1_loss: 32.1636, fm_loss: 0.0152, perc_loss: 9.0124, edge: 0.2008

Epoch: [ 3], Batch: [ 300/ 425] | Total Time: 1h 2m 11s

d_loss: 1.3883, g_loss: 41.9954, const_loss: 0.0017, l1_loss: 31.8905, fm_loss: 0.0145, perc_loss: 9.1924, edge: 0.2035

Epoch: [ 3], Batch: [ 400/ 425] | Total Time: 1h 6m 6s

d_loss: 1.3868, g_loss: 43.8342, const_loss: 0.0018, l1_loss: 33.4607, fm_loss: 0.0168, perc_loss: 9.4464, edge: 0.2156

--- End of Epoch 3 --- Time: 1016.7s ---

LR Scheduler stepped. Current LR G: 0.000244, LR D: 0.000244

Epoch: [ 4], Batch: [ 0/ 425] | Total Time: 1h 7m 10s

d_loss: 1.3867, g_loss: 40.6623, const_loss: 0.0023, l1_loss: 31.2383, fm_loss: 0.0141, perc_loss: 8.5227, edge: 0.1920

Epoch: [ 4], Batch: [ 100/ 425] | Total Time: 1h 11m 5s

d_loss: 1.3868, g_loss: 41.5975, const_loss: 0.0021, l1_loss: 31.8086, fm_loss: 0.0138, perc_loss: 8.8737, edge: 0.2064

Epoch: [ 4], Batch: [ 200/ 425] | Total Time: 1h 15m 0s

d_loss: 1.3869, g_loss: 42.8209, const_loss: 0.0018, l1_loss: 32.8971, fm_loss: 0.0157, perc_loss: 9.0095, edge: 0.2039

Epoch: [ 4], Batch: [ 300/ 425] | Total Time: 1h 19m 0s

d_loss: 1.3867, g_loss: 40.9848, const_loss: 0.0020, l1_loss: 31.7265, fm_loss: 0.0150, perc_loss: 8.3516, edge: 0.1969

Epoch: [ 4], Batch: [ 400/ 425] | Total Time: 1h 22m 55s

d_loss: 1.3873, g_loss: 40.2710, const_loss: 0.0017, l1_loss: 30.8541, fm_loss: 0.0139, perc_loss: 8.5166, edge: 0.1917

--- End of Epoch 4 --- Time: 1002.4s ---

LR Scheduler stepped. Current LR G: 0.000241, LR D: 0.000241

Epoch: [ 5], Batch: [ 0/ 425] | Total Time: 1h 23m 53s

d_loss: 1.3869, g_loss: 40.3556, const_loss: 0.0018, l1_loss: 31.2933, fm_loss: 0.0123, perc_loss: 8.1729, edge: 0.1824

Epoch: [ 5], Batch: [ 100/ 425] | Total Time: 1h 27m 49s

d_loss: 1.3868, g_loss: 37.1562, const_loss: 0.0018, l1_loss: 28.7403, fm_loss: 0.0126, perc_loss: 7.5385, edge: 0.1701

Epoch: [ 5], Batch: [ 200/ 425] | Total Time: 1h 31m 44s

d_loss: 1.3868, g_loss: 42.0820, const_loss: 0.0018, l1_loss: 32.4558, fm_loss: 0.0137, perc_loss: 8.7140, edge: 0.2038

Epoch: [ 5], Batch: [ 300/ 425] | Total Time: 1h 35m 39s

d_loss: 1.3876, g_loss: 41.8540, const_loss: 0.0018, l1_loss: 32.1257, fm_loss: 0.0130, perc_loss: 8.8235, edge: 0.1972

Epoch: [ 5], Batch: [ 400/ 425] | Total Time: 1h 39m 34s

d_loss: 1.3872, g_loss: 42.2984, const_loss: 0.0021, l1_loss: 32.5657, fm_loss: 0.0141, perc_loss: 8.8301, edge: 0.1936

--- End of Epoch 5 --- Time: 998.9s ---

LR Scheduler stepped. Current LR G: 0.000236, LR D: 0.000236

Epoch: [ 6], Batch: [ 0/ 425] | Total Time: 1h 40m 32s

d_loss: 1.3871, g_loss: 38.3635, const_loss: 0.0019, l1_loss: 29.6556, fm_loss: 0.0116, perc_loss: 7.8188, edge: 0.1827

Epoch: [ 6], Batch: [ 100/ 425] | Total Time: 1h 44m 30s

d_loss: 1.3868, g_loss: 36.9271, const_loss: 0.0015, l1_loss: 28.6191, fm_loss: 0.0117, perc_loss: 7.4322, edge: 0.1698

Epoch: [ 6], Batch: [ 200/ 425] | Total Time: 1h 48m 25s

d_loss: 1.3869, g_loss: 40.5806, const_loss: 0.0019, l1_loss: 31.2277, fm_loss: 0.0137, perc_loss: 8.4474, edge: 0.1971

Epoch: [ 6], Batch: [ 300/ 425] | Total Time: 1h 52m 20s

d_loss: 1.3872, g_loss: 37.3803, const_loss: 0.0015, l1_loss: 29.0808, fm_loss: 0.0117, perc_loss: 7.4258, edge: 0.1677

Epoch: [ 6], Batch: [ 400/ 425] | Total Time: 1h 56m 15s

d_loss: 1.3870, g_loss: 41.1677, const_loss: 0.0016, l1_loss: 31.7574, fm_loss: 0.0139, perc_loss: 8.5066, edge: 0.1952

--- End of Epoch 6 --- Time: 1001.7s ---

LR Scheduler stepped. Current LR G: 0.000232, LR D: 0.000232

Epoch: [ 7], Batch: [ 0/ 425] | Total Time: 1h 57m 13s

d_loss: 1.3867, g_loss: 40.5463, const_loss: 0.0018, l1_loss: 31.6367, fm_loss: 0.0132, perc_loss: 8.0234, edge: 0.1784

Epoch: [ 7], Batch: [ 100/ 425] | Total Time: 2h 1m 9s

d_loss: 1.4018, g_loss: 39.6393, const_loss: 0.0014, l1_loss: 30.8184, fm_loss: 0.0130, perc_loss: 7.9361, edge: 0.1775

Epoch: [ 7], Batch: [ 200/ 425] | Total Time: 2h 5m 4s

d_loss: 1.3871, g_loss: 40.0493, const_loss: 0.0016, l1_loss: 31.2954, fm_loss: 0.0118, perc_loss: 7.8749, edge: 0.1728

Epoch: [ 7], Batch: [ 300/ 425] | Total Time: 2h 9m 5s

d_loss: 1.3870, g_loss: 40.0854, const_loss: 0.0014, l1_loss: 31.2887, fm_loss: 0.0121, perc_loss: 7.9085, edge: 0.1819

Epoch: [ 7], Batch: [ 400/ 425] | Total Time: 2h 13m 0s

d_loss: 1.3868, g_loss: 38.4497, const_loss: 0.0018, l1_loss: 29.4812, fm_loss: 0.0127, perc_loss: 8.0730, edge: 0.1881

--- End of Epoch 7 --- Time: 1011.8s ---

LR Scheduler stepped. Current LR G: 0.000226, LR D: 0.000226

Epoch: [ 8], Batch: [ 0/ 425] | Total Time: 2h 14m 5s

d_loss: 1.3867, g_loss: 40.3691, const_loss: 0.0015, l1_loss: 31.3992, fm_loss: 0.0129, perc_loss: 8.0733, edge: 0.1895

Epoch: [ 8], Batch: [ 100/ 425] | Total Time: 2h 18m 5s

d_loss: 1.3868, g_loss: 39.6883, const_loss: 0.0014, l1_loss: 30.9755, fm_loss: 0.0144, perc_loss: 7.8100, edge: 0.1942

Epoch: [ 8], Batch: [ 200/ 425] | Total Time: 2h 22m 5s

d_loss: 1.3869, g_loss: 38.9455, const_loss: 0.0013, l1_loss: 30.4862, fm_loss: 0.0115, perc_loss: 7.5859, edge: 0.1678

Epoch: [ 8], Batch: [ 300/ 425] | Total Time: 2h 26m 5s

d_loss: 1.3868, g_loss: 38.9105, const_loss: 0.0015, l1_loss: 30.2053, fm_loss: 0.0122, perc_loss: 7.8220, edge: 0.1766
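To compare metric lines like the ones above without reading them by eye, a small parser helps. The sketch below turns one line into a dict and also computes what remains of g_loss after subtracting the terms the log lists separately. The helper names are hypothetical, and treating that remainder as the adversarial term is an assumption, since the loss code is not shown here.

```python
import re

# Matches "name: 12.3456" pairs as they appear in the metric lines.
METRIC_RE = re.compile(r"(\w+): ([0-9]+\.[0-9]+)")

def parse_metrics(line):
    """Turn 'd_loss: 1.3868, g_loss: 38.9105, ...' into a dict of floats."""
    return {name: float(value) for name, value in METRIC_RE.findall(line)}

def g_loss_residual(metrics):
    """g_loss minus the generator-side terms that the log lists separately."""
    listed = ("const_loss", "l1_loss", "fm_loss", "perc_loss", "edge")
    return metrics["g_loss"] - sum(metrics[k] for k in listed)

# Last metric line of the first-round log above, copied verbatim.
line = ("d_loss: 1.3868, g_loss: 38.9105, const_loss: 0.0015, l1_loss: 30.2053, "
        "fm_loss: 0.0122, perc_loss: 7.8220, edge: 0.1766")
m = parse_metrics(line)
print(round(g_loss_residual(m), 4))  # 0.6929
```

The remainder sits close to ln 2 ≈ 0.6931, and d_loss stays near 2 ln 2 ≈ 1.3863 in this log. If the remainder is a binary cross-entropy adversarial term, those are the values a discriminator outputting 0.5 for every sample would give, which may be worth checking.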

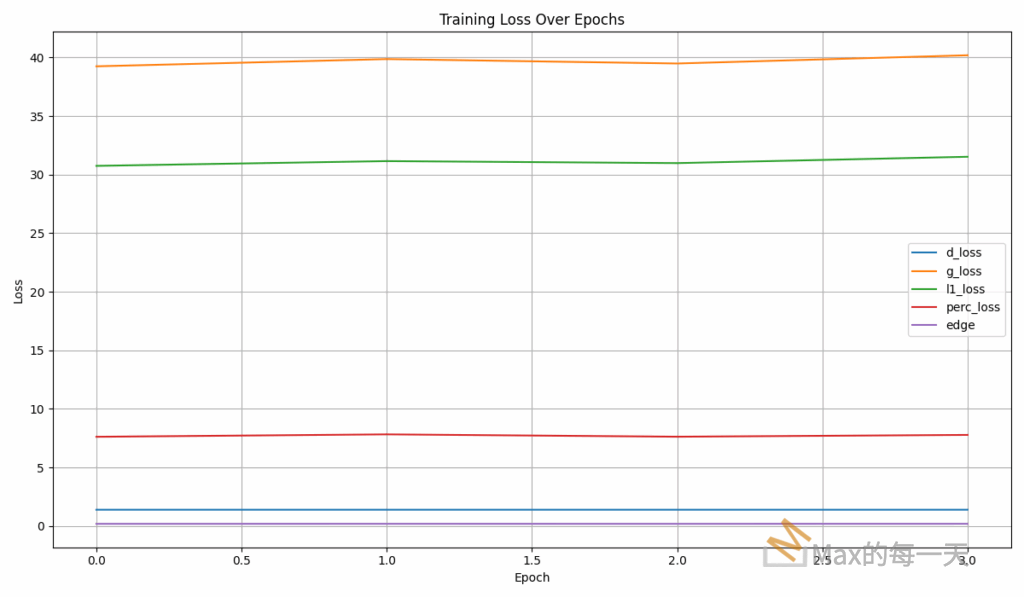

from checkpoint 40 to 42:

log:

Model 40 loaded successfully

unpickled total 8072 examples

Starting training from epoch 0/39...

Epoch: [ 0], Batch: [ 0/ 425] | Total Time: 4s

d_loss: 1.3870, g_loss: 40.7028, const_loss: 0.0017, l1_loss: 31.9401, fm_loss: 0.0129, perc_loss: 7.8705, edge: 0.1848

Checkpoint step 100 reached, but saving starts after step 200.

Epoch: [ 0], Batch: [ 100/ 425] | Total Time: 4m 1s

d_loss: 1.3873, g_loss: 37.1872, const_loss: 0.0011, l1_loss: 29.2400, fm_loss: 0.0101, perc_loss: 7.0855, edge: 0.1577

Epoch: [ 0], Batch: [ 200/ 425] | Total Time: 8m 4s

d_loss: 1.3868, g_loss: 39.8605, const_loss: 0.0013, l1_loss: 31.1717, fm_loss: 0.0118, perc_loss: 7.8002, edge: 0.1826

Epoch: [ 0], Batch: [ 300/ 425] | Total Time: 12m 2s

d_loss: 1.3873, g_loss: 40.3438, const_loss: 0.0014, l1_loss: 31.3573, fm_loss: 0.0131, perc_loss: 8.0812, edge: 0.1980

Epoch: [ 0], Batch: [ 400/ 425] | Total Time: 16m 2s

d_loss: 1.3870, g_loss: 38.1177, const_loss: 0.0016, l1_loss: 30.0107, fm_loss: 0.0119, perc_loss: 7.2275, edge: 0.1732

--- End of Epoch 0 --- Time: 1018.5s ---

LR Scheduler stepped. Current LR G: 0.000240, LR D: 0.000240

Epoch: [ 1], Batch: [ 0/ 425] | Total Time: 17m 0s

d_loss: 1.3868, g_loss: 40.8472, const_loss: 0.0016, l1_loss: 32.0174, fm_loss: 0.0122, perc_loss: 7.9452, edge: 0.1779

Epoch: [ 1], Batch: [ 100/ 425] | Total Time: 20m 58s

d_loss: 1.3868, g_loss: 39.2715, const_loss: 0.0010, l1_loss: 30.7872, fm_loss: 0.0128, perc_loss: 7.5811, edge: 0.1965

Epoch: [ 1], Batch: [ 200/ 425] | Total Time: 25m 2s

d_loss: 1.3872, g_loss: 38.7685, const_loss: 0.0013, l1_loss: 30.1408, fm_loss: 0.0113, perc_loss: 7.7513, edge: 0.1710

Epoch: [ 1], Batch: [ 300/ 425] | Total Time: 28m 59s

d_loss: 1.3869, g_loss: 38.4651, const_loss: 0.0013, l1_loss: 30.1492, fm_loss: 0.0109, perc_loss: 7.4366, edge: 0.1742

Epoch: [ 1], Batch: [ 400/ 425] | Total Time: 33m 3s

d_loss: 1.3869, g_loss: 41.9479, const_loss: 0.0015, l1_loss: 32.6564, fm_loss: 0.0121, perc_loss: 8.3880, edge: 0.1970

--- End of Epoch 1 --- Time: 1020.7s ---

LR Scheduler stepped. Current LR G: 0.000239, LR D: 0.000239

Epoch: [ 2], Batch: [ 0/ 425] | Total Time: 34m 1s

d_loss: 1.3868, g_loss: 38.6342, const_loss: 0.0014, l1_loss: 30.2853, fm_loss: 0.0114, perc_loss: 7.4776, edge: 0.1655

Epoch: [ 2], Batch: [ 100/ 425] | Total Time: 37m 59s

d_loss: 1.3869, g_loss: 38.2602, const_loss: 0.0011, l1_loss: 30.1447, fm_loss: 0.0112, perc_loss: 7.2297, edge: 0.1806

Epoch: [ 2], Batch: [ 200/ 425] | Total Time: 41m 56s

d_loss: 1.3869, g_loss: 37.8839, const_loss: 0.0015, l1_loss: 29.7314, fm_loss: 0.0113, perc_loss: 7.2757, edge: 0.1711

Epoch: [ 2], Batch: [ 300/ 425] | Total Time: 45m 59s

d_loss: 1.3868, g_loss: 42.3750, const_loss: 0.0013, l1_loss: 32.9836, fm_loss: 0.0125, perc_loss: 8.4817, edge: 0.2031

Epoch: [ 2], Batch: [ 400/ 425] | Total Time: 49m 56s

d_loss: 1.3868, g_loss: 40.2631, const_loss: 0.0013, l1_loss: 31.7473, fm_loss: 0.0117, perc_loss: 7.6305, edge: 0.1794

--- End of Epoch 2 --- Time: 1013.8s ---

LR Scheduler stepped. Current LR G: 0.000237, LR D: 0.000237

Epoch: [ 3], Batch: [ 0/ 425] | Total Time: 50m 55s

d_loss: 1.3873, g_loss: 39.7065, const_loss: 0.0014, l1_loss: 31.3875, fm_loss: 0.0112, perc_loss: 7.4304, edge: 0.1831

Epoch: [ 3], Batch: [ 100/ 425] | Total Time: 54m 53s

d_loss: 1.3873, g_loss: 41.6738, const_loss: 0.0015, l1_loss: 32.4121, fm_loss: 0.0125, perc_loss: 8.3621, edge: 0.1929

Epoch: [ 3], Batch: [ 200/ 425] | Total Time: 58m 52s

d_loss: 1.3868, g_loss: 39.1758, const_loss: 0.0012, l1_loss: 30.7618, fm_loss: 0.0110, perc_loss: 7.5289, edge: 0.1800

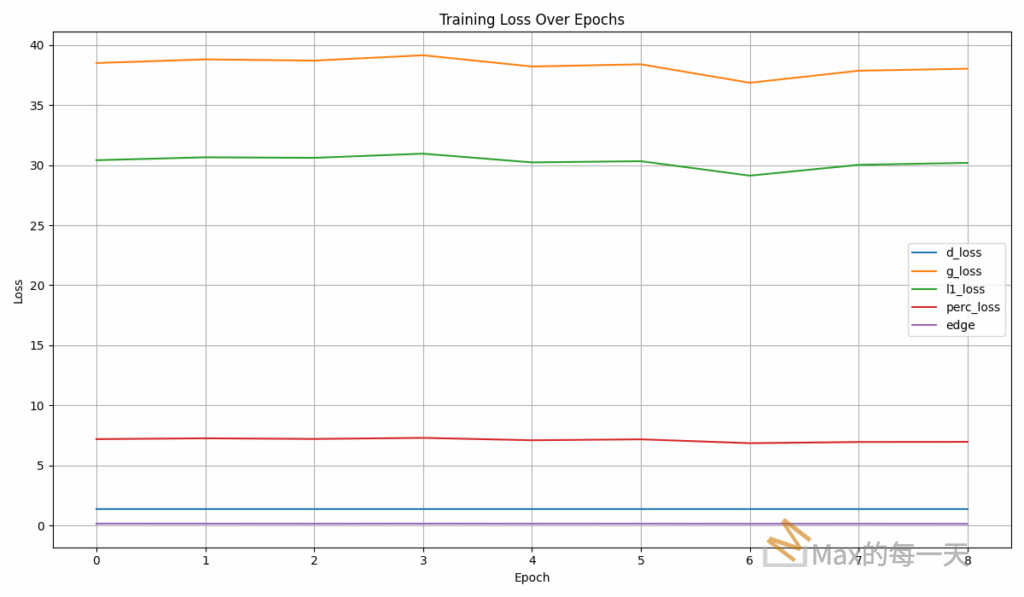

from checkpoint 44 to 46:

log:

Epoch: [ 0], Batch: [ 0/ 425] | Total Time: 4s

d_loss: 1.3876, g_loss: 39.9197, const_loss: 0.0016, l1_loss: 31.5896, fm_loss: 0.0109, perc_loss: 7.4445, edge: 0.1803

Checkpoint step 100 reached, but saving starts after step 200.

Epoch: [ 0], Batch: [ 100/ 425] | Total Time: 3m 43s

d_loss: 1.3871, g_loss: 37.0317, const_loss: 0.0009, l1_loss: 29.2118, fm_loss: 0.0094, perc_loss: 6.9423, edge: 0.1744

Epoch: [ 0], Batch: [ 200/ 425] | Total Time: 7m 38s

d_loss: 1.3868, g_loss: 38.6627, const_loss: 0.0011, l1_loss: 30.6234, fm_loss: 0.0103, perc_loss: 7.1660, edge: 0.1691

Epoch: [ 0], Batch: [ 300/ 425] | Total Time: 11m 26s

d_loss: 1.3876, g_loss: 39.0920, const_loss: 0.0011, l1_loss: 30.7573, fm_loss: 0.0103, perc_loss: 7.4581, edge: 0.1724

Epoch: [ 0], Batch: [ 400/ 425] | Total Time: 15m 18s

d_loss: 1.3870, g_loss: 37.7203, const_loss: 0.0014, l1_loss: 29.8430, fm_loss: 0.0103, perc_loss: 7.0090, edge: 0.1638

--- End of Epoch 0 --- Time: 972.8s ---

LR Scheduler stepped. Current LR G: 0.000240, LR D: 0.000240

Epoch: [ 1], Batch: [ 0/ 425] | Total Time: 16m 15s

d_loss: 1.3869, g_loss: 40.1108, const_loss: 0.0015, l1_loss: 31.6669, fm_loss: 0.0103, perc_loss: 7.5646, edge: 0.1747

Epoch: [ 1], Batch: [ 100/ 425] | Total Time: 20m 4s

d_loss: 1.3872, g_loss: 37.9115, const_loss: 0.0010, l1_loss: 30.1502, fm_loss: 0.0098, perc_loss: 6.8961, edge: 0.1614

Epoch: [ 1], Batch: [ 200/ 425] | Total Time: 23m 55s

d_loss: 1.3874, g_loss: 37.9066, const_loss: 0.0012, l1_loss: 29.7076, fm_loss: 0.0091, perc_loss: 7.3347, edge: 0.1613

Epoch: [ 1], Batch: [ 300/ 425] | Total Time: 27m 44s

d_loss: 1.3869, g_loss: 37.2111, const_loss: 0.0011, l1_loss: 29.5739, fm_loss: 0.0090, perc_loss: 6.7799, edge: 0.1542

Epoch: [ 1], Batch: [ 400/ 425] | Total Time: 31m 33s

d_loss: 1.3868, g_loss: 40.8036, const_loss: 0.0012, l1_loss: 32.1219, fm_loss: 0.0101, perc_loss: 7.7870, edge: 0.1906

--- End of Epoch 1 --- Time: 975.4s ---

LR Scheduler stepped. Current LR G: 0.000239, LR D: 0.000239

Epoch: [ 2], Batch: [ 0/ 425] | Total Time: 32m 30s

d_loss: 1.3868, g_loss: 37.3369, const_loss: 0.0012, l1_loss: 29.6465, fm_loss: 0.0091, perc_loss: 6.8282, edge: 0.1589

Epoch: [ 2], Batch: [ 100/ 425] | Total Time: 36m 19s

d_loss: 1.3868, g_loss: 37.7298, const_loss: 0.0010, l1_loss: 29.8788, fm_loss: 0.0093, perc_loss: 6.9882, edge: 0.1596

Epoch: [ 2], Batch: [ 200/ 425] | Total Time: 40m 8s

d_loss: 1.3871, g_loss: 37.2137, const_loss: 0.0015, l1_loss: 29.4129, fm_loss: 0.0093, perc_loss: 6.9386, edge: 0.1585

Epoch: [ 2], Batch: [ 300/ 425] | Total Time: 43m 57s

d_loss: 1.3875, g_loss: 40.9921, const_loss: 0.0011, l1_loss: 32.3108, fm_loss: 0.0098, perc_loss: 7.8051, edge: 0.1724

Epoch: [ 2], Batch: [ 400/ 425] | Total Time: 47m 46s

d_loss: 1.3868, g_loss: 40.1596, const_loss: 0.0011, l1_loss: 31.7497, fm_loss: 0.0101, perc_loss: 7.5312, edge: 0.1747

--- End of Epoch 2 --- Time: 972.9s ---

LR Scheduler stepped. Current LR G: 0.000237, LR D: 0.000237

Epoch: [ 3], Batch: [ 0/ 425] | Total Time: 48m 43s

d_loss: 1.3872, g_loss: 38.4147, const_loss: 0.0013, l1_loss: 30.7693, fm_loss: 0.0087, perc_loss: 6.7819, edge: 0.1606

Epoch: [ 3], Batch: [ 100/ 425] | Total Time: 52m 32s

d_loss: 1.3870, g_loss: 40.7046, const_loss: 0.0015, l1_loss: 31.9569, fm_loss: 0.0104, perc_loss: 7.8653, edge: 0.1777

Epoch: [ 3], Batch: [ 200/ 425] | Total Time: 56m 21s

d_loss: 1.3868, g_loss: 38.2503, const_loss: 0.0010, l1_loss: 30.3318, fm_loss: 0.0096, perc_loss: 7.0497, edge: 0.1653

Epoch: [ 3], Batch: [ 300/ 425] | Total Time: 1h 14s

d_loss: 1.3876, g_loss: 38.3024, const_loss: 0.0010, l1_loss: 30.1309, fm_loss: 0.0094, perc_loss: 7.2896, edge: 0.1786

Epoch: [ 3], Batch: [ 400/ 425] | Total Time: 1h 4m 3s

d_loss: 1.3868, g_loss: 39.9832, const_loss: 0.0011, l1_loss: 31.5451, fm_loss: 0.0103, perc_loss: 7.5521, edge: 0.1816

--- End of Epoch 3 --- Time: 977.8s ---

LR Scheduler stepped. Current LR G: 0.000234, LR D: 0.000234

Epoch: [ 4], Batch: [ 0/ 425] | Total Time: 1h 5m 1s

d_loss: 1.3868, g_loss: 38.0624, const_loss: 0.0011, l1_loss: 30.0018, fm_loss: 0.0093, perc_loss: 7.1867, edge: 0.1706

Epoch: [ 4], Batch: [ 100/ 425] | Total Time: 1h 8m 50s

d_loss: 1.3868, g_loss: 37.5376, const_loss: 0.0012, l1_loss: 29.8460, fm_loss: 0.0080, perc_loss: 6.8375, edge: 0.1521

Epoch: [ 4], Batch: [ 200/ 425] | Total Time: 1h 12m 39s

d_loss: 1.3869, g_loss: 40.0073, const_loss: 0.0013, l1_loss: 31.5500, fm_loss: 0.0100, perc_loss: 7.5749, edge: 0.1782

Epoch: [ 4], Batch: [ 300/ 425] | Total Time: 1h 16m 30s

d_loss: 1.3868, g_loss: 38.0489, const_loss: 0.0011, l1_loss: 30.3171, fm_loss: 0.0097, perc_loss: 6.8649, edge: 0.1632

Epoch: [ 4], Batch: [ 400/ 425] | Total Time: 1h 20m 19s

d_loss: 1.3870, g_loss: 37.3342, const_loss: 0.0010, l1_loss: 29.3878, fm_loss: 0.0093, perc_loss: 7.0816, edge: 0.1616

--- End of Epoch 4 --- Time: 975.1s ---

LR Scheduler stepped. Current LR G: 0.000231, LR D: 0.000231

Epoch: [ 5], Batch: [ 0/ 425] | Total Time: 1h 21m 16s

d_loss: 1.3867, g_loss: 38.0942, const_loss: 0.0011, l1_loss: 30.2584, fm_loss: 0.0085, perc_loss: 6.9735, edge: 0.1598

Epoch: [ 5], Batch: [ 100/ 425] | Total Time: 1h 25m 5s

d_loss: 1.3868, g_loss: 34.8611, const_loss: 0.0010, l1_loss: 27.6542, fm_loss: 0.0084, perc_loss: 6.3573, edge: 0.1474

Epoch: [ 5], Batch: [ 200/ 425] | Total Time: 1h 28m 53s

d_loss: 1.3868, g_loss: 39.8917, const_loss: 0.0012, l1_loss: 31.4210, fm_loss: 0.0095, perc_loss: 7.5831, edge: 0.1841

Epoch: [ 5], Batch: [ 300/ 425] | Total Time: 1h 32m 43s

d_loss: 1.3878, g_loss: 39.2498, const_loss: 0.0009, l1_loss: 30.8915, fm_loss: 0.0094, perc_loss: 7.4744, edge: 0.1807

Epoch: [ 5], Batch: [ 400/ 425] | Total Time: 1h 36m 35s

d_loss: 1.3872, g_loss: 39.7966, const_loss: 0.0012, l1_loss: 31.3808, fm_loss: 0.0093, perc_loss: 7.5426, edge: 0.1699

--- End of Epoch 5 --- Time: 975.4s ---

LR Scheduler stepped. Current LR G: 0.000227, LR D: 0.000227

Epoch: [ 6], Batch: [ 0/ 425] | Total Time: 1h 37m 31s

d_loss: 1.3873, g_loss: 36.2315, const_loss: 0.0010, l1_loss: 28.6654, fm_loss: 0.0080, perc_loss: 6.7063, edge: 0.1579

Epoch: [ 6], Batch: [ 100/ 425] | Total Time: 1h 41m 20s

d_loss: 1.3868, g_loss: 35.1864, const_loss: 0.0010, l1_loss: 27.8280, fm_loss: 0.0079, perc_loss: 6.5170, edge: 0.1396

Epoch: [ 6], Batch: [ 200/ 425] | Total Time: 1h 45m 11s

d_loss: 1.3868, g_loss: 38.0196, const_loss: 0.0010, l1_loss: 29.9935, fm_loss: 0.0096, perc_loss: 7.1570, edge: 0.1657

Epoch: [ 6], Batch: [ 300/ 425] | Total Time: 1h 49m 0s

d_loss: 1.3869, g_loss: 35.6019, const_loss: 0.0012, l1_loss: 28.2725, fm_loss: 0.0090, perc_loss: 6.4748, edge: 0.1515

Epoch: [ 6], Batch: [ 400/ 425] | Total Time: 1h 52m 49s

d_loss: 1.3869, g_loss: 39.1795, const_loss: 0.0008, l1_loss: 30.8312, fm_loss: 0.0105, perc_loss: 7.4684, edge: 0.1757

--- End of Epoch 6 --- Time: 973.7s ---

LR Scheduler stepped. Current LR G: 0.000222, LR D: 0.000222

Epoch: [ 7], Batch: [ 0/ 425] | Total Time: 1h 53m 45s

d_loss: 1.3868, g_loss: 38.2588, const_loss: 0.0011, l1_loss: 30.5410, fm_loss: 0.0098, perc_loss: 6.8584, edge: 0.1556

Epoch: [ 7], Batch: [ 100/ 425] | Total Time: 1h 57m 35s

d_loss: 1.3869, g_loss: 37.9072, const_loss: 0.0010, l1_loss: 30.0380, fm_loss: 0.0086, perc_loss: 7.0121, edge: 0.1546

Epoch: [ 7], Batch: [ 200/ 425] | Total Time: 2h 1m 24s

d_loss: 1.3868, g_loss: 38.5386, const_loss: 0.0010, l1_loss: 30.5912, fm_loss: 0.0094, perc_loss: 7.0748, edge: 0.1694

Epoch: [ 7], Batch: [ 300/ 425] | Total Time: 2h 5m 18s

d_loss: 1.3868, g_loss: 38.4329, const_loss: 0.0009, l1_loss: 30.5603, fm_loss: 0.0089, perc_loss: 7.0009, edge: 0.1691

Epoch: [ 7], Batch: [ 400/ 425] | Total Time: 2h 9m 13s

d_loss: 1.3867, g_loss: 36.0851, const_loss: 0.0012, l1_loss: 28.3616, fm_loss: 0.0081, perc_loss: 6.8651, edge: 0.1562

--- End of Epoch 7 --- Time: 985.7s ---

LR Scheduler stepped. Current LR G: 0.000217, LR D: 0.000217

Epoch: [ 8], Batch: [ 0/ 425] | Total Time: 2h 10m 11s

d_loss: 1.3868, g_loss: 38.4818, const_loss: 0.0010, l1_loss: 30.4990, fm_loss: 0.0089, perc_loss: 7.1142, edge: 0.1659

Epoch: [ 8], Batch: [ 100/ 425] | Total Time: 2h 13m 59s

d_loss: 1.3867, g_loss: 37.6523, const_loss: 0.0010, l1_loss: 30.0526, fm_loss: 0.0087, perc_loss: 6.7452, edge: 0.1519

Epoch: [ 8], Batch: [ 200/ 425] | Total Time: 2h 17m 49s

d_loss: 1.3870, g_loss: 37.9076, const_loss: 0.0009, l1_loss: 29.9917, fm_loss: 0.0088, perc_loss: 7.0699, edge: 0.1434
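The epoch summary lines also allow a quick throughput comparison between the three runs. The sketch below divides the reported epoch time by the batch count. It assumes 425 batches per epoch, as the Batch counter suggests, and the helper name is hypothetical.

```python
import re

EPOCH_RE = re.compile(r"End of Epoch\s+(\d+) --- Time: ([0-9.]+)s")
BATCHES_PER_EPOCH = 425  # assumption, read off the "Batch: [ 0/ 425]" lines

def seconds_per_batch(line, batches=BATCHES_PER_EPOCH):
    """Average seconds per batch for one 'End of Epoch' line, or None if no match."""
    match = EPOCH_RE.search(line)
    if match is None:
        return None
    return float(match.group(2)) / batches

# Epoch 0 summary lines of the three runs above, copied verbatim.
runs = {
    "first round": "--- End of Epoch 0 --- Time: 1000.8s ---",
    "checkpoint 40 to 42": "--- End of Epoch 0 --- Time: 1018.5s ---",
    "checkpoint 44 to 46": "--- End of Epoch 0 --- Time: 972.8s ---",
}
for name, line in runs.items():
    print(f"{name}: {seconds_per_batch(line):.2f} s/batch")
```

The same function can be applied to every "End of Epoch" line of a run to see whether per-batch time drifts over a session.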